Doki Doki is a responsive, emotive wearable. It was created during the spring and summer of 2020 as a speculative, modular garment that explores forms of nonverbal communication. In particular, it explores cues that emerge in reaction to changes in our usual rituals of social interaction during the COVID-19 pandemic, when protective face masks may obscure faces and micro-expressions. The garment investigates how data can be visualised covertly and/or overtly during social and other encounters, to tell observers something about the wearer and their environment. It also investigates how data can be conveyed as ‘secret languages’ or signals.

Doki Doki was exhibited as part of the Design Exhibition of the 2021 ACM International Symposium on Wearable Computers (ISWC) and its accompanying paper can be downloaded from here.

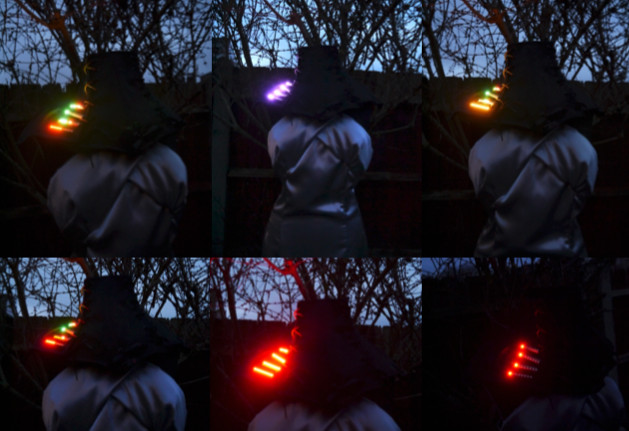

The device uses pattern and colour, in the form of four RGB LED NeoPixel bars, to visualise data from sensors affixed to a bespoke neck corset. Firstly, the garment functions as a three-column binary clock, displaying hours, minutes and seconds. Binary is a rarely used way of presenting time visually compared with a 12-hour clock face or a numerical 12/24-hour display, and so it is indecipherable to many people. This is a reminder that there are different approaches we can take to presenting ubiquitous data.
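To make the binary clock concrete, here is a minimal C sketch. It is not the garment's actual firmware. It assumes a hypothetical layout in which each clock column is one 8-LED NeoPixel bar, with LED 0 at the bottom showing the least significant bit. The names `LEDS_PER_BAR`, `binary_clock_columns` and `column_to_leds` are illustrative only.

```c
#include <stdbool.h>
#include <stdint.h>

/* Assumption: each column is an 8-LED NeoPixel bar; LED 0 is the bottom LED. */
#define LEDS_PER_BAR 8

/* Split a 24-hour time into the three column values (hours, minutes, seconds).
   Returns false if the time is out of range. */
bool binary_clock_columns(unsigned h, unsigned m, unsigned s, uint8_t cols[3]) {
    if (h > 23 || m > 59 || s > 59) {
        return false;
    }
    cols[0] = (uint8_t)h; /* 0-23 needs 5 bits */
    cols[1] = (uint8_t)m; /* 0-59 needs 6 bits */
    cols[2] = (uint8_t)s; /* 0-59 needs 6 bits */
    return true;
}

/* Decide which LEDs in one column are lit: LED i shows bit i of the value. */
void column_to_leds(uint8_t value, bool lit[LEDS_PER_BAR]) {
    for (unsigned i = 0; i < LEDS_PER_BAR; i++) {
        lit[i] = ((value >> i) & 1u) != 0;
    }
}
```

For example, 13:45:07 gives hours = 13 (binary 1101), so the first, third and fourth LEDs from the bottom of the hours bar would light. The lit/unlit flags would then be handed to whatever LED driver the hardware uses, with a colour chosen per column.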

In automatic mode, the LED display periodically switches from visualising the time to amplifying emotive and environmental data. The emotive data takes the form of the wearer's changing heart rate (read from an ear sensor) as they respond to social and situational interaction, which observers may interpret as emotive data. When the display is not showing time or heart rate data, it projects spatial data as proximity detection (using an infrared sensor) relative to the wearer's personal space. For example, it can indicate how close an approaching person is (in daylight or darkness), using the familiar traffic-light colour sequence as LED feedback to warn people if they are getting too near. With a button press, the wearer can choose between timed cycling through all three data outputs or displaying just one of them. Showing a single output may be appropriate when emotive privacy, a focus on social distancing, or personal boundaries are required.
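The mode selection and the proximity warning can be sketched as a small state machine in C. This is an illustrative reconstruction, not the project's code. The 10-second display slot and the 100 cm / 200 cm distance thresholds are hypothetical values. The sketch also assumes the infrared reading has already been converted to centimetres and that `now_ms` comes from a millisecond timer (for instance Arduino's `millis()`, if that is the platform, which is itself an assumption).

```c
#include <stdint.h>

/* A button press steps through these modes in order. */
typedef enum { MODE_AUTO, MODE_TIME, MODE_HEART, MODE_PROXIMITY, MODE_COUNT } Mode;
/* What the LED bars are currently showing. */
typedef enum { SHOW_TIME, SHOW_HEART, SHOW_PROXIMITY } View;
/* Traffic-light colours for the proximity display. */
typedef enum { SIGNAL_GREEN, SIGNAL_AMBER, SIGNAL_RED } Signal;

#define AUTO_SLOT_MS 10000u /* hypothetical: each view lasts 10 s in auto mode */
#define NEAR_CM 100u        /* hypothetical: closer than this shows red */
#define MID_CM 200u         /* hypothetical: closer than this shows amber */

/* Advance to the next mode on a (debounced) button press, wrapping around. */
Mode next_mode(Mode m) {
    return (Mode)((m + 1) % MODE_COUNT);
}

/* Fixed modes show one output; auto mode cycles time -> heart -> proximity. */
View current_view(Mode m, uint32_t now_ms) {
    switch (m) {
    case MODE_TIME:      return SHOW_TIME;
    case MODE_HEART:     return SHOW_HEART;
    case MODE_PROXIMITY: return SHOW_PROXIMITY;
    default:             return (View)((now_ms / AUTO_SLOT_MS) % 3u);
    }
}

/* Map a measured distance to a traffic-light colour. */
Signal proximity_signal(unsigned distance_cm) {
    if (distance_cm < NEAR_CM) return SIGNAL_RED;
    if (distance_cm < MID_CM)  return SIGNAL_AMBER;
    return SIGNAL_GREEN;
}
```

Keeping the chosen mode separate from the view being shown means a single button only has to advance `Mode`. The rendering loop can then ask `current_view` what to draw on each frame.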

The garment itself explores aesthetics, repurposing and sustainability through a modular, plug-and-play design ethos. The neck collar is made up of four separate boned corset pieces, made using traditional corsetry methods and fabrics. These let the wearer tailor the garment to their body for comfort and change its materiality, purpose and aesthetic as required. For example, the corset lacing allows the garment to be loosened so that it can be worn over layers of clothing or on its own. The garment's functionality can also be repurposed, as the sensors and actuators can be swapped and changed according to the wearer's requirements. This is made possible by a bespoke plug-and-play circuit board, and users who are familiar with coding can easily reprogram and update the device's C code.
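One common way to make swappable sensors easy to reprogram in C is a table of modules, each exposing a read function through a function pointer. The minimal sketch below shows that pattern. It is not a description of the Doki Doki firmware. The four-slot limit, the struct and function names, and the stand-in `fake_*` read functions are all hypothetical.

```c
#include <stddef.h>
#include <string.h>

/* Hypothetical plug-in interface: every module supplies a name and a read function. */
typedef struct {
    const char *name;
    int (*read)(void); /* latest reading, in module-specific units */
} SensorModule;

#define MAX_SLOTS 4 /* assumption: up to four plug-in modules */

typedef struct {
    SensorModule slots[MAX_SLOTS];
    size_t count;
} ModuleTable;

/* Add a module to the next free slot; returns its slot index, or -1 if full/invalid. */
int register_module(ModuleTable *t, const char *name, int (*read)(void)) {
    if (t->count >= MAX_SLOTS || name == NULL || read == NULL) return -1;
    t->slots[t->count].name = name;
    t->slots[t->count].read = read;
    return (int)t->count++;
}

/* Swap the module in an occupied slot, e.g. after plugging in a different sensor. */
int replace_module(ModuleTable *t, size_t slot, const char *name, int (*read)(void)) {
    if (slot >= t->count || name == NULL || read == NULL) return -1;
    t->slots[slot].name = name;
    t->slots[slot].read = read;
    return 0;
}

/* Look a module up by name; returns NULL if it is not plugged in. */
const SensorModule *find_module(const ModuleTable *t, const char *name) {
    for (size_t i = 0; i < t->count; i++) {
        if (strcmp(t->slots[i].name, name) == 0) return &t->slots[i];
    }
    return NULL;
}

/* Stand-in read functions for illustration only (fixed values, no hardware). */
int fake_pulse(void) { return 72; }
int fake_distance(void) { return 120; }
int fake_light(void) { return 300; }
```

With this pattern, the display code only calls `find_module(...)->read()`. Changing a sensor then means changing one registration line rather than the visualisation logic.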

Using wearables in this way may contribute to the discussion of how and why we might advance the use of covert and overt data on the body to create nonverbal cues and secret languages. This is especially true of devices that are informed by, and react to, physiological and environmental situations, and that can therefore aid us in social interaction during the pandemic. The relevance extends beyond the pandemic too. Because the pandemic requires us to find new ways of communicating and socialising, it also drives forward the evolution and embodiment of technology. This could lead to future acceptance of prominently worn devices on the body, where in the past they have been rejected.

The next iteration of this project is already underway and includes PCB design and production to streamline the device's circuit layout. It also adds an extra mode button, since having a single button for all of the device's functionality was not particularly user-friendly. The second button will separate the visualisation modes from data recording. It will add record and playback functionality, and allow the wearer to download and track their time-stamped emotive and spatial data. Encounters and emotive reactions can then be saved privately and analysed later for reflection. Moreover, record and playback continue the ethical discussion around ‘emotive engineering’, in which recorded personal data is played back to influence or change a situation or its outcome.
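The planned record and playback feature could be built on a fixed-size ring buffer of time-stamped samples, which suits a microcontroller with little RAM. The C sketch below is illustrative only. The `Sample` fields, the 64-entry capacity and the overwrite-oldest policy are assumptions, not details of the next iteration.

```c
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical record: one time-stamped reading of emotive and spatial data. */
typedef struct {
    uint32_t timestamp_s; /* e.g. seconds since recording started */
    uint8_t heart_bpm;    /* heart rate in beats per minute */
    uint16_t distance_cm; /* proximity reading in centimetres */
} Sample;

#define LOG_CAPACITY 64u /* assumption: small buffer; oldest samples get overwritten */

typedef struct {
    Sample buf[LOG_CAPACITY];
    uint32_t head;  /* index where the next sample will be written */
    uint32_t count; /* number of valid samples, at most LOG_CAPACITY */
} SampleLog;

/* Record a sample, overwriting the oldest one once the buffer is full. */
void log_record(SampleLog *log, Sample s) {
    log->buf[log->head] = s;
    log->head = (log->head + 1u) % LOG_CAPACITY;
    if (log->count < LOG_CAPACITY) log->count++;
}

/* Playback: copy the i-th oldest stored sample into *out (0 = oldest).
   Returns false if i is past the end of the recording. */
bool log_playback(const SampleLog *log, uint32_t i, Sample *out) {
    if (i >= log->count) return false;
    uint32_t oldest = (log->head + LOG_CAPACITY - log->count) % LOG_CAPACITY;
    *out = log->buf[(oldest + i) % LOG_CAPACITY];
    return true;
}
```

Playing samples back from index 0 upwards replays an encounter in chronological order. A download step could walk the same indices to copy the log off the device.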